A guide to practicing Open Science with Sysrev, Part 2: Data reuse

In Part 1 of this guide, we shared practical tips for using Sysrev’s data sharing features to make the underlying data from a systematic review or other document review project openly available to the research community. Data sharing not only promotes transparency and accountability in research—it also enables others to leverage existing work, reduce duplication of effort, and accelerate discovery. By allowing researchers to build on shared datasets rather than starting from scratch, open data practices help mitigate research waste and strengthen the replicability and reproducibility of findings.

In Part 2, we turn to Sysrev’s data reuse capabilities, which make it easier to access, repurpose, and extend data shared in existing projects. These features support the reuse of not just eligibility decisions and extracted data, but also a project’s data extraction framework—including labels, label descriptions, answer options, and label settings. This allows researchers to maintain consistent data structures across projects, improving comparability and interoperability. Over time, this consistency contributes to a growing ecosystem of structured, reusable metadata, laying the groundwork for more connected, cumulative, and efficient evidence synthesis.

Below are some of Sysrev’s data reuse features, along with best practices for reusing Sysrev data.

Exporting datasets from public projects

From any public Sysrev project, you can export the data either in EndNote XML format or as a multi-tabbed Excel file containing all reviewer labels and article metadata. With these data, you can conduct your own analyses or reproduce the results of a published systematic review. For more about exporting data, visit our Knowledge Base.
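If you choose the EndNote XML export, a few lines of Python are enough to start working with it. The sketch below is illustrative only. It assumes the export follows the common EndNote XML layout (`xml/records/record/titles/title`, with title text wrapped in `<style>` elements), and the function name `read_endnote_titles` is hypothetical, not part of Sysrev. Check the structure of your own export before relying on it.

```python
import xml.etree.ElementTree as ET
from typing import List, Union


def read_endnote_titles(xml_data: Union[str, bytes]) -> List[str]:
    """Return the title of each <record> in an EndNote-style XML export.

    Assumption: the export uses the common EndNote XML layout
    xml/records/record/titles/title, where the title text may be
    wrapped in one or more <style> elements.
    """
    root = ET.fromstring(xml_data)
    titles = []
    for record in root.iter("record"):
        title_el = record.find("titles/title")
        if title_el is None:
            # Skip records that have no title element
            continue
        # itertext() collects text from the nested <style> elements as well
        titles.append("".join(title_el.itertext()).strip())
    return titles


if __name__ == "__main__":
    sample = (
        "<xml><records>"
        '<record><titles><title><style face="normal">Trial A</style></title></titles></record>'
        '<record><titles><title><style face="normal">Trial B</style></title></titles></record>'
        "</records></xml>"
    )
    print(read_endnote_titles(sample))  # ['Trial A', 'Trial B']
```

For a downloaded file, you could pass the raw bytes, for example `read_endnote_titles(open("export.xml", "rb").read())`, where `export.xml` stands in for whatever your export is named. The same loop can be extended to pull authors, years, or other fields once you have confirmed where they sit in your export.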

Building on existing reviews with clones and child projects

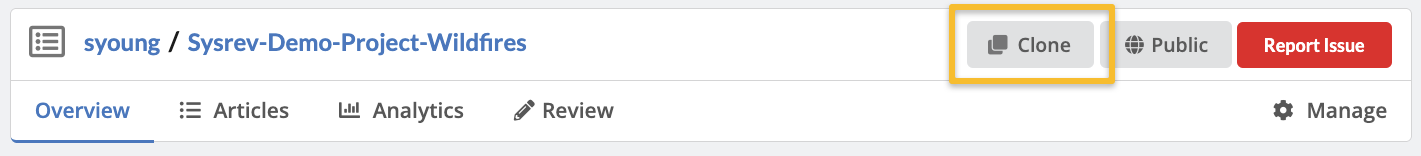

Copies of public Sysrev projects can be easily created using the Clone or Child Project features. These tools let you build on existing reviews by reusing or refining their content.

Clones replicate an entire project framework, including its labels. This is useful when a previous review has a well-developed data extraction scheme that fits a new or updated review on a similar topic. Simply use the Clone button to duplicate the label structure and project setup.

Child projects allow you to create new reviews from a filtered subset of articles in an existing project. For example, you can isolate studies on a specific intervention from a previous review, then create a new project to explore different outcomes or extract new data. You can also add new search results to extend the original review. Visit our Knowledge Base to learn more about creating child projects.

Label reuse

Sometimes, a single label from a previous review can be useful in a new project. Since labels—especially complex categorical or group labels—can be time-consuming to recreate, you can use the label import feature to easily copy an existing label into your new review. Reusing the exact label also helps ensure consistency and allows you to build directly on the previous review’s dataset.

Providing credit

When you’ve reused data, labels, or a project framework, you should cite the original Sysrev project in your work. Ideally, the project owner will have provided a suggested citation in the project description. If not, include as much information as you can in your citation, such as the names of the review author(s), the year of the published review, the name of the Sysrev project, and the URL from the Overview page.

In Part 3 of this guide, we’ll highlight some real-world examples of how Sysrev has been used in open science practice.