How to use the Auto-labeler

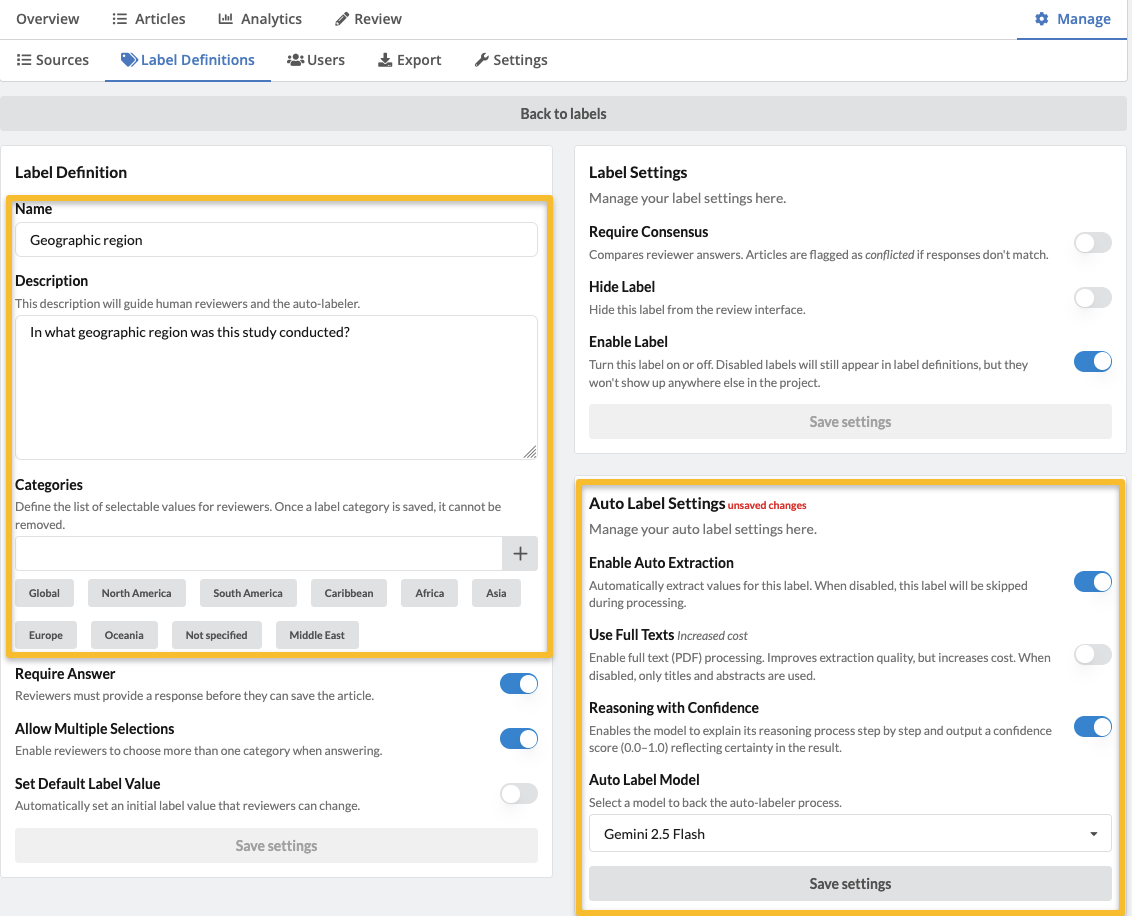

Your label description serves as the generative AI prompt that the Auto-labeler uses to apply label answers to each record. For categorical labels, the Auto-labeler also reads the Categories to retrieve its possible answers. To set up your labels for auto-labeling, go to Manage -> Label Definitions. To edit an existing label, click its gear icon; to create a new one, click the Create New Label button.

1. Set your label description prompt and Auto-label settings

In this example, we have created a categorical label and want the Auto-labeler to answer the question "In what geographic region was this study conducted?", providing the following answers: Africa, North America, Caribbean, Asia, Central America, South America, Oceania, Europe, Middle East, not specified.

Note: We included a 'not specified' option. Without it, the Auto-labeler would be forced to select one of the regions, even if none were correct. Alternatively, we could turn off "Require answer", which allows the Auto-labeler to skip the article if no answer is appropriate.
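To make the relationship between the label description, the Categories, and "Require answer" more concrete, here is a minimal sketch in Python. It is not the Auto-labeler's actual implementation: the `CategoricalLabel` class, the `build_prompt` function, and the prompt wording are all hypothetical and only illustrate how a question and its answer options might be combined into a single prompt.

```python
from dataclasses import dataclass


@dataclass
class CategoricalLabel:
    """Hypothetical stand-in for a categorical label definition."""
    question: str            # the label description, used as the prompt
    categories: list[str]    # the allowed answers
    require_answer: bool = True


def build_prompt(label: CategoricalLabel, title: str, abstract: str) -> str:
    """Assemble an illustrative prompt from the label and article metadata."""
    lines = [
        label.question,
        "Choose one of: " + "; ".join(label.categories),
    ]
    if not label.require_answer:
        # With "Require answer" off, the model is allowed to skip the article.
        lines.append("If none of the options applies, give no answer.")
    lines.append(f"Title: {title}")
    lines.append(f"Abstract: {abstract}")
    return "\n".join(lines)


region_label = CategoricalLabel(
    question="In what geographic region was this study conducted?",
    categories=[
        "Africa", "North America", "Caribbean", "Asia", "Central America",
        "South America", "Oceania", "Europe", "Middle East", "not specified",
    ],
)

prompt = build_prompt(region_label, "Example title", "Example abstract text.")
print(prompt)
```

In this sketch the 'not specified' category gives the model an explicit fallback, while setting `require_answer=False` adds a separate instruction that permits no answer at all.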

In this example, we have selected the following Auto-labeler options:

- toggled ON Enable Auto Extraction, so that the Auto-labeler runs on this label;

- toggled OFF Use Full Texts, so the Auto-labeler only looks at the metadata (title and abstract) and not any attached PDFs;

- toggled ON Reasoning with Confidence, to add the built-in chain-of-thought reasoning questions to the prompt.

Note: Reasoning with Confidence adds a set of built-in reasoning steps (known as 'chain-of-thought' prompting) to your prompt and will likely affect Auto-labeler performance. It is worth running your prompt with and without this feature to determine which setting works better in your case.

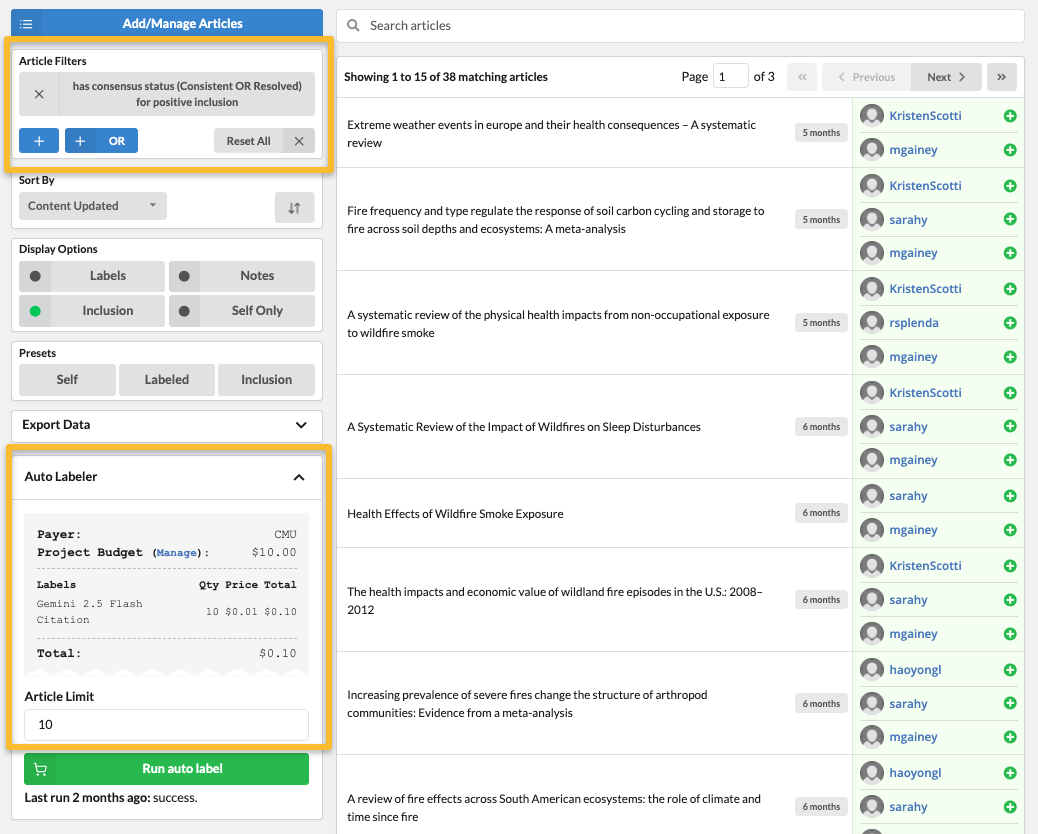

2. Set up your article filter and Auto-label limit

After clicking Save, we can go back to the Articles tab to select the articles that we want to Auto-label. In this example, we filtered for only those records that have been included by two reviewers. We set our Max Articles (the number of records we want the Auto-labeler to label) to 10. You can see that the Auto-labeler has provided a cost breakdown and estimated the cost of this run to be $0.05.

When you are ready, and your selection does not exceed the budget in your account, click the Run Auto-label button. Upon successful completion, the message "Last run just now: success" will appear at the bottom of the Auto-labeler box. To view Auto-label answers, click on one of the labeled articles in the list and scroll down. Below the article abstract you will see the Auto-label answers. In this example, you can see that the Auto-labeler identified ecological and environmental impacts in this study record. It also included this record with 80% certainty.

Note: If, instead of "Last run just now: success", the message indicates that part of the run failed, simply click the Run Auto-label button again. It will run only on the failed items and will often succeed on a second try.
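The retry behavior described in the note above can be pictured with a short Python sketch. This is an illustration only, under the assumption that each article's outcome is tracked separately; `run_auto_label`, `flaky_labeler`, and the result format are hypothetical names, not part of the product.

```python
def run_auto_label(article_ids, label_one, previous=None):
    """Label each article, skipping those that already succeeded.

    `label_one` is any callable that returns an answer for one article ID
    or raises an exception on failure (hypothetical interface).
    """
    results = dict(previous or {})
    for article_id in article_ids:
        if results.get(article_id, {}).get("status") == "success":
            continue  # already labeled in an earlier run
        try:
            results[article_id] = {"status": "success", "answer": label_one(article_id)}
        except Exception as exc:
            results[article_id] = {"status": "failed", "error": str(exc)}
    return results


calls = []


def flaky_labeler(article_id):
    """Toy labeler that fails the first time it sees article 2."""
    calls.append(article_id)
    if article_id == 2 and calls.count(2) == 1:
        raise RuntimeError("temporary failure")
    return "Europe"


first = run_auto_label([1, 2, 3], flaky_labeler)
second = run_auto_label([1, 2, 3], flaky_labeler, previous=first)

failed_first = [a for a, r in first.items() if r["status"] == "failed"]
failed_second = [a for a, r in second.items() if r["status"] == "failed"]
print(failed_first, failed_second, calls)
```

Here the second run calls the labeler only for article 2, the one item that failed the first time, and leaves the earlier successful answers untouched.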

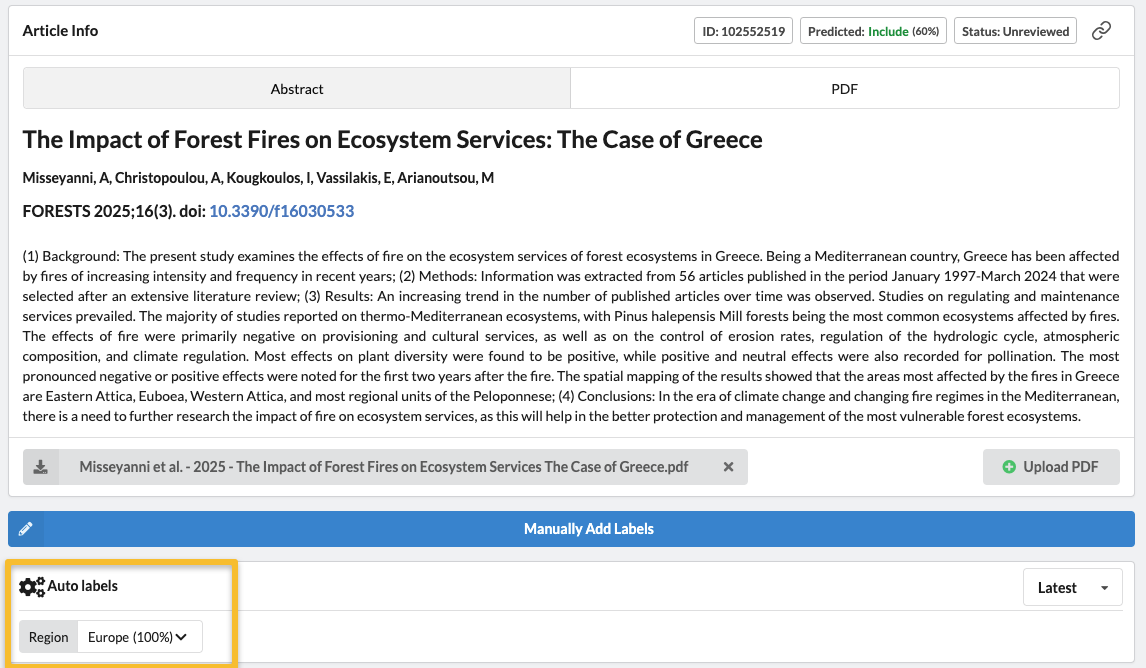

3. Review Auto-label answers

Once you run the Auto-labeler, you will see Auto-label answers at the bottom of each record as shown in the image above. If you enabled "Reasoning with Confidence", you can view the Auto-labeler's reasoning process by clicking on the dropdown arrow next to the Auto-label answer.

In this example, we can see that the Auto-labeler selected Europe with 100% certainty.

You will now also see an Auto-label Report, located at the bottom left of the Overview page of your project. This report provides detailed analytics of the Auto-label answers in comparison to reviewer answers. See more about the report on the Understanding the Auto-label Report page.
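As a rough idea of what comparing Auto-label answers to reviewer answers can involve, the Python sketch below computes a simple agreement rate. It is not how the Auto-label Report calculates its analytics; the `agreement_rate` function and the toy answers are made up purely for illustration.

```python
def agreement_rate(auto_answers, reviewer_answers):
    """Share of articles, labeled by both sources, where the answers match.

    Both arguments map article IDs to a single categorical answer.
    Returns None when no article has answers from both sources.
    """
    shared = auto_answers.keys() & reviewer_answers.keys()
    if not shared:
        return None
    matches = sum(auto_answers[a] == reviewer_answers[a] for a in shared)
    return matches / len(shared)


# Toy data for illustration only; article 4 has no reviewer answer yet.
auto = {1: "Europe", 2: "Asia", 3: "Africa", 4: "Europe"}
reviewer = {1: "Europe", 2: "Asia", 3: "Europe"}

rate = agreement_rate(auto, reviewer)
print(f"Agreement on {len(auto.keys() & reviewer.keys())} shared articles: {rate:.0%}")
```

A low agreement rate on a small test set is usually a cue to revise the label description before running the Auto-labeler on more articles.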

You can also have the Auto-labeler prefill the labels in the Review tab. To learn more, visit our Prefill label answers help page.

For a recommended process for testing and revising your prompt, check out our Suggested Workflow for Testing and Running Auto-labels page.

Pro tip: For tips and tricks on optimizing your prompts, check out the Tips for Optimizing your Auto-label Prompts page.